Polluted data poses risk to AI safety, ministry says

The Ministry of State Security issued a stark warning on Tuesday about artificial intelligence security risks stemming from contaminated training data, calling it a fundamental challenge to AI safety.

In an article published on its official WeChat account, the ministry said AI data sources are often polluted by mixed-quality content containing false information, fabricated narratives and biased viewpoints. As AI is increasingly integrated into China's socioeconomic sectors, such contamination poses risks to high-quality development and national security, it said.

Data serves as the essential foundation for AI systems, providing the raw material for models to learn patterns, make decisions and generate content, the ministry said. It warned that compromised data quality directly undermines model reliability. Citing research, it noted that even a small contamination level — such as 0.01 percent of false text — can increase harmful outputs by 11.2 percent.

The ministry also highlighted the danger of "recursive pollution", in which false content generated by AI becomes part of training datasets for future models, leading to compounding errors. Real-world risks include financial market manipulation through fabricated information, public panic triggered by misinformation and life-threatening medical misjudgments from corrupted diagnostic algorithms, it said.

To counter these threats, the ministry proposed stricter source supervision under current cybersecurity and data protection laws, comprehensive risk assessments and systematic data-cleansing frameworks. It pledged to collaborate with relevant agencies to safeguard AI and data security under China's national security framework.

Zhang Xi, deputy dean and professor at the School of Cyberspace Security at the Beijing University of Posts and Telecommunications, said China is particularly vulnerable because of a shortage of high-quality Chinese-language training data. Chinese data makes up only 1.3 percent of global large-model datasets, he said.

This scarcity, along with copyright restrictions and inadequate data infrastructure, has forced domestic developers to rely on lower-quality sources such as machine-translated or synthetic content, which worsens data pollution and hinders progress in Chinese AI development, he said.

Zhang cited the GPT-3 model, which was trained on 750 gigabytes of data, and China's DeepSeek-V3 model, trained on 14.8 trillion high-quality tokens, or text fragments. Such datasets are drawn from massive libraries of books, academic papers, online texts and code. Because of their scale, manual inspection is neither feasible nor cost-effective, making data contamination an increasingly serious bottleneck, he said.

Polluted training data also creates unpredictable risks in high-stakes fields such as medicine, autonomous driving and national defense, Zhang said. He cited a study in which the insertion of 5,000 fabricated medical records raised misdiagnosis rates by 73 percent. In another example, inserting three manipulated image frames caused autonomous vehicles to mistake pedestrians for garbage bags, leading to a 92 percent collision rate in testing.

Zhang also warned of malicious data poisoning campaigns, in which adversarial actors inject content contrary to China's core socialist values. He pointed to foreign-developed models that generated separatist content related to the Xizang autonomous region as an example.

To protect data sovereignty, Zhang advocated for greater investment in domestic data collection and the establishment of national public data platforms. He also called for legal mechanisms to criminalize malicious data poisoning and assign liability for data contamination caused by negligence, with responsibilities clarified for developers, data providers and operators.

Shen Yang, a professor at Tsinghua University's School of Journalism and Communication and College of AI, defined AI data pollution as the inclusion of erroneous, incomplete, biased or deliberately manipulated content in training data.

This fundamentally weakens AI models' comprehension, judgment and output reliability, he said.

Shen compared polluted training data to "cooking with spoiled ingredients".

He said malicious actors may seek to manipulate AI on sensitive topics, mislead the public, undermine competitors or probe vulnerabilities in AI systems. While such acts are usually isolated rather than coordinated conspiracies, their cumulative impact can erode public trust in AI, he said.

For the general public, Shen said it is essential to understand that AI-generated content can shape — or distort — their perception of reality. "They need to see through the logic behind AI, just like identifying the motives behind people's words," he said.

- Polluted data poses risk to AI safety, ministry says

- World Games to see unique opening

- Novelist captures shared culture of China's old alleyway communities

- Private war memorial hall in Shanxi draws 3.4 million visitors in 11 years

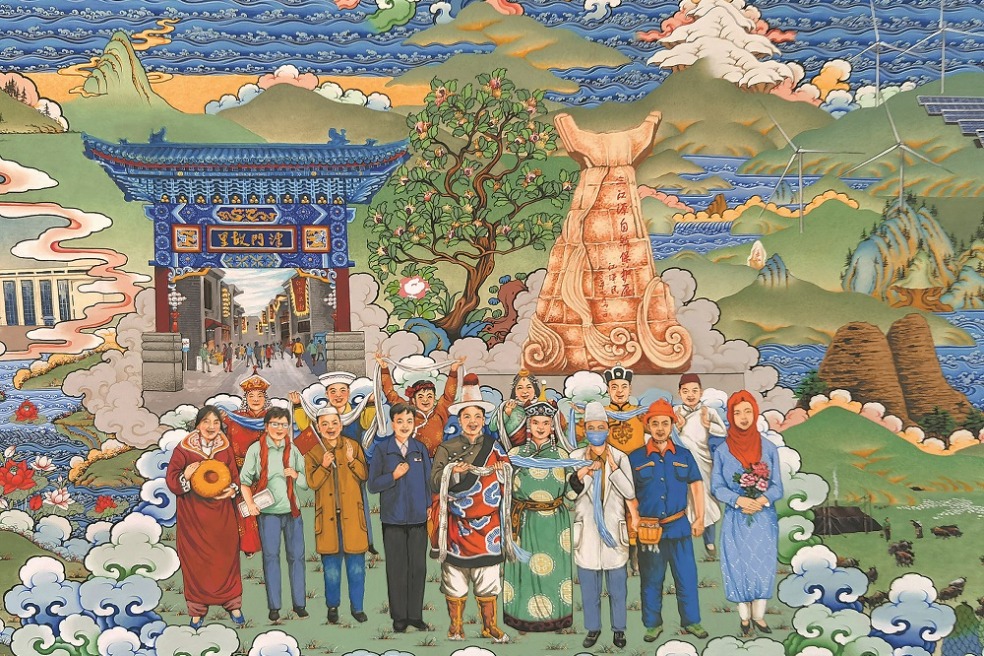

- Aid program advances Xizang's development

- Ex-AVIC chief faces corruption charges